10. Check the status.

    [root@localhost ~]# cat /proc/mdstat
    Personalities : [raid6] [raid5] [raid4]
    md6 : active raid6 sdg1[5] sdf1[4] sde1[3] sdd1[2] sdc1[1](F) sdb1[0](F)
          41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/2] [__UU]
          [====>................]  recovery = 23.8% (4993596/20953088) finish=1.2min speed=208066K/sec
    unused devices: <none>
    [root@localhost ~]# mdadm -D /dev/md6
    /dev/md6:
               Version : 1.2
         Creation Time : Sun Aug 25 16:34:36 2019
            Raid Level : raid6
            Array Size : 41906176 (39.96 GiB 42.91 GB)
         Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 6
           Persistence : Superblock is persistent
           Update Time : Sun Aug 25 16:41:09 2019
                 State : clean, degraded, recovering
        Active Devices : 2
       Working Devices : 4
        Failed Devices : 2
         Spare Devices : 2
                Layout : left-symmetric
            Chunk Size : 512K
    Consistency Policy : resync
        Rebuild Status : 13% complete
                  Name : localhost:6  (local to host localhost)
                  UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb
                Events : 27
        Number   Major   Minor   RaidDevice State
           5       8       97        0      spare rebuilding   /dev/sdg1
           4       8       81        1      spare rebuilding   /dev/sdf1
           2       8       49        2      active sync   /dev/sdd1
           3       8       65        3      active sync   /dev/sde1
           0       8       17        -      faulty   /dev/sdb1
           1       8       33        -      faulty   /dev/sdc1

11. Check the status again.

    [root@localhost ~]# cat /proc/mdstat
    Personalities : [raid6] [raid5] [raid4]
    md6 : active raid6 sdg1[5] sdf1[4] sde1[3] sdd1[2] sdc1[1](F) sdb1[0](F)
          41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]
    unused devices: <none>
    [root@localhost ~]# mdadm -D /dev/md6
    /dev/md6:
               Version : 1.2
         Creation Time : Sun Aug 25 16:34:36 2019
            Raid Level : raid6
            Array Size : 41906176 (39.96 GiB 42.91 GB)
         Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 6
           Persistence : Superblock is persistent
           Update Time : Sun Aug 25 16:42:42 2019
                 State : clean
        Active Devices : 4
       Working Devices : 4
        Failed Devices : 2
         Spare Devices : 0
                Layout : left-symmetric
            Chunk Size : 512K
    Consistency Policy : resync
                  Name : localhost:6  (local to host localhost)
                  UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb
                Events : 46
        Number   Major   Minor   RaidDevice State
           5       8       97        0      active sync   /dev/sdg1
           4       8       81        1      active sync   /dev/sdf1
           2       8       49        2      active sync   /dev/sdd1
           3       8       65        3      active sync   /dev/sde1
           0       8       17        -      faulty   /dev/sdb1
           1       8       33        -      faulty   /dev/sdc1

12. Remove the failed disks.
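
Before removing the failed members, it can help to confirm that the rebuild has really finished rather than reading `/proc/mdstat` by eye. The following is only a minimal sketch: `md_state` is a hypothetical helper name (not part of mdadm), and it assumes the `/proc/mdstat` layout shown in the previous steps, where an unfinished rebuild appears as a `recovery =` or `resync =` line and missing members appear as `_` in the `[UUUU]` field.

```shell
#!/usr/bin/env bash
# md_state ARRAY [FILE]: classify one md array from mdstat-format text.
# Prints "rebuilding", "degraded", "healthy", or "unknown" (array not found).
# FILE defaults to /proc/mdstat; md_state itself is a hypothetical helper.
md_state() {
    local array="$1" file="${2:-/proc/mdstat}"
    awk -v name="$array" '
        # A header line such as "md6 : active raid6 ..." starts an array block.
        /^[^ ]+ :/ {
            in_array = ($1 == name)
            if (in_array) found = 1
            next
        }
        # A blank line ends the current array block.
        NF == 0 { in_array = 0 }
        # A progress line means recovery or resync is still running.
        in_array && /(recovery|resync) *=/ { rebuilding = 1 }
        # The member map, e.g. [__UU]; an underscore marks a missing member.
        in_array && match($0, /\[[U_]+\]/) {
            if (index(substr($0, RSTART, RLENGTH), "_")) degraded = 1
        }
        END {
            if (!found)          { print "unknown"; exit 1 }
            if (rebuilding)      print "rebuilding"
            else if (degraded)   print "degraded"
            else                 print "healthy"
        }
    ' "$file"
}
```

On a live system, `md_state md6` reads `/proc/mdstat` directly; removing the faulty disks is reasonable once it no longer reports `rebuilding`. mdadm also provides `mdadm --wait /dev/md6`, which blocks until any resync or recovery on the array has completed.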

    [root@localhost ~]# mdadm -r /dev/md6 /dev/sd{b,c}1
    mdadm: hot removed /dev/sdb1 from /dev/md6
    mdadm: hot removed /dev/sdc1 from /dev/md6
    [root@localhost ~]# mdadm -D /dev/md6
    /dev/md6:
               Version : 1.2
         Creation Time : Sun Aug 25 16:34:36 2019
            Raid Level : raid6
            Array Size : 41906176 (39.96 GiB 42.91 GB)
         Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Sun Aug 25 16:43:43 2019
                 State : clean
        Active Devices : 4
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 0
                Layout : left-symmetric
            Chunk Size : 512K
    Consistency Policy : resync
                  Name : localhost:6  (local to host localhost)
                  UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb
                Events : 47
        Number   Major   Minor   RaidDevice State
           5       8       97        0      active sync   /dev/sdg1
           4       8       81        1      active sync   /dev/sdf1
           2       8       49        2      active sync   /dev/sdd1
           3       8       65        3      active sync   /dev/sde1

13. Re-add the hot spare disks.

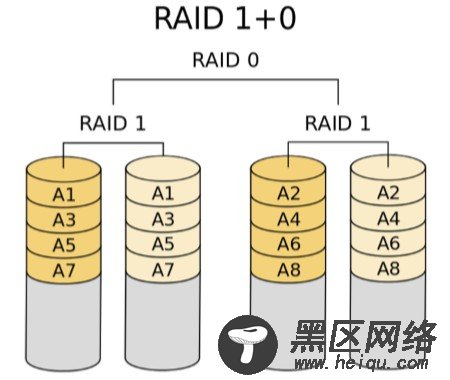

    [root@localhost ~]# mdadm -a /dev/md6 /dev/sd{b,c}1
    mdadm: added /dev/sdb1
    mdadm: added /dev/sdc1
    [root@localhost ~]# mdadm -D /dev/md6
    /dev/md6:
               Version : 1.2
         Creation Time : Sun Aug 25 16:34:36 2019
            Raid Level : raid6
            Array Size : 41906176 (39.96 GiB 42.91 GB)
         Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 6
           Persistence : Superblock is persistent
           Update Time : Sun Aug 25 16:44:01 2019
                 State : clean
        Active Devices : 4
       Working Devices : 6
        Failed Devices : 0
         Spare Devices : 2
                Layout : left-symmetric
            Chunk Size : 512K
    Consistency Policy : resync
                  Name : localhost:6  (local to host localhost)
                  UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb
                Events : 49
        Number   Major   Minor   RaidDevice State
           5       8       97        0      active sync   /dev/sdg1
           4       8       81        1      active sync   /dev/sdf1
           2       8       49        2      active sync   /dev/sdd1
           3       8       65        3      active sync   /dev/sde1
           6       8       17        -      spare   /dev/sdb1
           7       8       33        -      spare   /dev/sdc1

RAID10

RAID10 mirrors the data first and then stripes it across the mirrored groups: the RAID1 layer acts as the redundant copy, while the RAID0 layer handles striped reads and writes. It needs at least four disks, combined in pairs into RAID1 mirrors that are then joined into a RAID0 stripe. Its capacity utilization is as low as RAID1's, only 50%. In exchange for giving up half of the raw capacity, a four-disk RAID10 offers up to roughly twice the speed of a single disk (200%) and keeps the data safe when any single disk fails; the data also stays safe when several disks fail at once, as long as the failed disks are not in the same RAID1 pair. RAID10 can deliver better performance than RAID5, in part because it has no parity to compute. Its drawbacks are poor expandability and relatively high cost.
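
The capacity and fault-tolerance rules above can be made concrete with a small calculation. This is only an illustrative sketch: the helper names `raid10_usable` and `raid10_survives` are hypothetical, and it assumes equal-size disks numbered from 0 in which disks 2k and 2k+1 form mirror pair k. A real array may pair its members differently.

```shell
#!/usr/bin/env bash
# raid10_usable DISKS SIZE: usable capacity (same unit as SIZE) when DISKS
# equal-size disks are paired into two-way mirrors and the mirrors are striped.
raid10_usable() {
    local disks="$1" size="$2"
    echo $(( disks / 2 * size ))
}

# raid10_survives FAILED...: given the numbers of the failed disks, report
# whether the data survives. Assumed numbering (for illustration only):
# disks 2k and 2k+1 are the two halves of mirror pair k.
raid10_survives() {
    local seen=" " hit=" " d pair
    for d in "$@"; do
        # Ignore a disk number that was already counted.
        case "$seen" in *" $d "*) continue ;; esac
        seen="$seen$d "
        pair=$(( d / 2 ))
        # A second failure inside the same mirror pair loses that pair's data.
        case "$hit" in
            *" $pair "*) echo "data lost"; return 1 ;;
        esac
        hit="$hit$pair "
    done
    echo "survives"
}
```

For example, `raid10_usable 4 20` gives 40 (four 20 GiB disks yield 40 GiB, the 50% utilization mentioned above). `raid10_survives 0 2` reports `survives` because the two failures fall in different mirror pairs, while `raid10_survives 0 1` reports `data lost` because both halves of one mirror are gone.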

Experiment: create a RAID10 array, format it, mount and use it, simulate a failure, and re-add the hot spares.