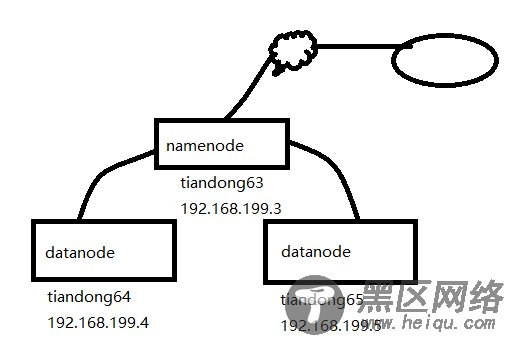

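Deploying a high-performance Hadoop cluster:

Topology diagram:

I. Preparing the lab environment:

1. Configure the hosts file on all three hosts (copy it to the other two hosts):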

[root@tiandong63 ~]# more /etc/hosts

192.168.199.3 tiandong63

192.168.199.4 tiandong64

192.168.199.5 tiandong65
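The same three entries must end up on every node. Below is a minimal sketch that appends them only when they are missing, so it can be re-run safely on each host. The helper name add_cluster_hosts and its optional file argument (default /etc/hosts) are illustrative and do not come from any tool.

```bash
#!/usr/bin/env bash
# Sketch: idempotently add the cluster's host entries to a hosts file.
# add_cluster_hosts is a hypothetical helper; pass a path other than
# /etc/hosts to try it out safely.

CLUSTER_HOSTS='192.168.199.3 tiandong63
192.168.199.4 tiandong64
192.168.199.5 tiandong65'

add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    # Skip the entry if a line already maps this IP to this hostname.
    if [ -f "$hosts_file" ] && awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$hosts_file"; then
      continue
    fi
    echo "$ip $name" >> "$hosts_file"
  done
}
```

Run it as root on each host (for example `add_cluster_hosts` with no argument). A second run leaves the file unchanged.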

2. Create the hadoop account (it must be created on the other two hosts as well):

[root@tiandong63 ~]# useradd -u 8000 hadoop

[root@tiandong63 ~]# echo '123456' | passwd --stdin hadoop

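3. Grant the hadoop user sudo privileges by adding the line below (configure this on the other two hosts as well). Editing with visudo instead of vim is safer: a syntax error in /etc/sudoers can disable sudo for every user, and visudo checks the file before saving it.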

[root@tiandong63 ~]# vim /etc/sudoers

hadoop ALL=(ALL) ALL

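4. Set up SSH trust between the hosts (on tiandong63):

This lets the hadoop user log in to tiandong63, tiandong64 and tiandong65 over SSH without a password, which simplifies copying files and starting services later. When the cluster is started from the NameNode host, the start scripts log in to each DataNode over SSH to launch its services.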

[root@tiandong63 ~]# su - hadoop

[hadoop@tiandong63 ~]$ ssh-keygen

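[hadoop@tiandong63 ~]$ ssh-copy-id hadoop@192.168.199.3

[hadoop@tiandong63 ~]$ ssh-copy-id hadoop@192.168.199.4

[hadoop@tiandong63 ~]$ ssh-copy-id hadoop@192.168.199.5

Install the key for the hadoop account on the target hosts, not for root, because the Hadoop start scripts log in as the user who runs them. tiandong63 is included as well, since its own NameNode and SecondaryNameNode are also started over SSH.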
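Before starting any daemons, you can confirm the trust relationship by running a check like the following as the hadoop user on tiandong63. This is a sketch. ssh's `-o BatchMode=yes` makes ssh fail instead of prompting for a password. `SSH_CMD` is a hypothetical override hook (default `ssh`) added only so the function can be exercised without a real cluster.

```bash
#!/usr/bin/env bash
# Sketch: verify passwordless SSH from the current user to each host.
# BatchMode=yes makes ssh fail instead of prompting for a password.
# SSH_CMD is a hypothetical override hook (default: ssh) for testing.

check_passwordless_ssh() {
  local ssh_cmd="${SSH_CMD:-ssh}" host failed=0
  for host in "$@"; do
    # $ssh_cmd is left unquoted on purpose so it may carry extra options.
    if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, run `check_passwordless_ssh tiandong63 tiandong64 tiandong65`. A FAIL line means the key for that host is missing, or its host key has not been accepted yet by an initial interactive login.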

II. Configuring the Hadoop environment

1. Configure the JDK environment (on all three hosts):

[root@tiandong63 ~]# ll jdk-8u191-linux-x64.tar.gz

-rw-r--r-- 1 root root 191753373 Dec 30 00:58 jdk-8u191-linux-x64.tar.gz

[root@tiandong63 ~]# tar zxvf jdk-8u191-linux-x64.tar.gz -C /usr/local/src/

[root@tiandong63 ~]# vim /etc/profile

export JAVA_HOME=/usr/local/src/jdk1.8.0_191

export JAVA_BIN=/usr/local/src/jdk1.8.0_191/bin

export PATH=${JAVA_HOME}/bin:$PATH

export CLASSPATH=.:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

# optional: print DEBUG-level Hadoop logs to the console
export HADOOP_ROOT_LOGGER=DEBUG,console

[root@tiandong63 ~]# source /etc/profile
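Shell variable names are case-sensitive. A line such as `export Java_HOME=...` therefore leaves JAVA_HOME unset, and the PATH entry ends up as plain /bin. The check below catches that kind of mistake. It is a sketch that assumes /etc/profile has already been sourced in the current shell, and check_java_home is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the Java settings exported from /etc/profile.
# check_java_home is a hypothetical helper; run it after "source /etc/profile".

check_java_home() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set (check the spelling in /etc/profile)" >&2
    return 1
  fi
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "no executable java under $JAVA_HOME/bin" >&2
    return 1
  fi
  # PATH must contain the bin directory of this same JDK.
  case ":$PATH:" in
    *":$JAVA_HOME/bin:"*) ;;
    *) echo "\$JAVA_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
  echo "JAVA_HOME OK: $JAVA_HOME"
}
```

With the profile above sourced, `check_java_home` should report /usr/local/src/jdk1.8.0_191.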

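2. Disable the firewall (on all three hosts). On SysV-init systems such as CentOS 6, the stop command takes effect immediately, and chkconfig keeps iptables from starting again after a reboot:

[root@tiandong63 ~]# /etc/init.d/iptables stop

[root@tiandong63 ~]# chkconfig iptables off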

3. Install Hadoop on tiandong63 and configure it as the NameNode (master node):

[root@tiandong63 ~]# cd /home/hadoop/

[root@tiandong63 hadoop]# ll hadoop-2.7.7.tar.gz

-rw-r--r-- 1 hadoop hadoop 218720521 Dec 29 21:11 hadoop-2.7.7.tar.gz

[root@tiandong63 ~]# su - hadoop

[hadoop@tiandong63 ~]$ tar -zxvf hadoop-2.7.7.tar.gz

Create Hadoop's working directories:

[hadoop@tiandong63 ~]$ mkdir -p /home/hadoop/dfs/name/ /home/hadoop/dfs/data/ /home/hadoop/tmp/

4. Configure Hadoop (edit 7 configuration files):

Files: hadoop-env.sh, yarn-env.sh, slaves, core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml

[hadoop@tiandong63 ~]$ cd /home/hadoop/hadoop-2.7.7/etc/hadoop/

[hadoop@tiandong63 hadoop]$ vim hadoop-env.sh    # Java runtime used by Hadoop

export JAVA_HOME=/usr/local/src/jdk1.8.0_191

[hadoop@tiandong63 hadoop]$ vim yarn-env.sh    # Java runtime used by the YARN framework

JAVA_HOME=/usr/local/src/jdk1.8.0_191
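You can make the same edits without opening an editor. Pinning the path in these files matters because daemons started over SSH by the start scripts do not read /etc/profile. The sketch below assumes GNU sed (as on CentOS). It rewrites any active `JAVA_HOME=` assignment in the file, and appends one if the file contains only a commented-out example. `set_java_home` and `JDK_DIR` are names chosen for this sketch.

```bash
#!/usr/bin/env bash
# Sketch: pin JAVA_HOME in hadoop-env.sh / yarn-env.sh without an editor.
# Requires GNU sed (-i, -E). JDK_DIR matches the JDK path used above.

JDK_DIR=/usr/local/src/jdk1.8.0_191

set_java_home() {
  local file="$1"
  if grep -Eq '^[[:space:]]*(export[[:space:]]+)?JAVA_HOME=' "$file"; then
    # Rewrite every active assignment, keeping its indentation.
    sed -i -E "s|^([[:space:]]*)(export[[:space:]]+)?JAVA_HOME=.*|\1export JAVA_HOME=${JDK_DIR}|" "$file"
  else
    # Only commented-out examples exist: append a real assignment.
    echo "export JAVA_HOME=${JDK_DIR}" >> "$file"
  fi
}
```

Run `set_java_home hadoop-env.sh` and `set_java_home yarn-env.sh` inside etc/hadoop, then review the result with `grep -n JAVA_HOME hadoop-env.sh yarn-env.sh`.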

[hadoop@tiandong63 hadoop]$ vim slaves    # DataNode hosts that store the data (start-yarn.sh also starts NodeManagers on them)

tiandong64

tiandong65

[hadoop@tiandong63 hadoop]$ vim core-site.xml    # core settings: NameNode URI (fs.defaultFS), I/O buffer size, base temporary directory

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://tiandong63:9000</value>
    </property>

    <property>
        <name>io.file.buffer.size</name>
        <value>131072</value>
    </property>

    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/hadoop/tmp</value>
        <description>A base for other temporary directories.</description>
    </property>
</configuration>
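The *-site.xml files must be identical on every node, so one option is to generate them from a script rather than edit them in vim. Below is a minimal sketch. `write_site_xml` is a hypothetical helper, not a Hadoop tool. It writes values verbatim without escaping XML special characters (&, <), which none of the values in this article contain, and it drops `<description>` elements.

```bash
#!/usr/bin/env bash
# Sketch: write a Hadoop *-site.xml file from name=value arguments.
# write_site_xml is a hypothetical helper; values are written verbatim,
# so XML special characters (&, <, >) are NOT escaped.

write_site_xml() {
  local out="$1" pair
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    for pair in "$@"; do
      # Split on the first "=": name before it, value after it.
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    echo '</configuration>'
  } > "$out"
}

# Example (run inside etc/hadoop):
#   write_site_xml core-site.xml fs.defaultFS=hdfs://tiandong63:9000 \
#     io.file.buffer.size=131072 hadoop.tmp.dir=file:/home/hadoop/tmp
```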

[hadoop@tiandong63 hadoop]$ mkdir -p /home/hadoop/tmp

[hadoop@tiandong63 hadoop]$ vim hdfs-site.xml

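hdfs-site.xml holds the HDFS settings:

- dfs.namenode.secondary.http-address is the HTTP address of the SecondaryNameNode.
- dfs.namenode.name.dir and dfs.datanode.data.dir are the local directories for NameNode metadata and DataNode blocks.
- dfs.replication is the number of replicas kept for each block, and should generally not exceed the number of DataNodes.
- dfs.webhdfs.enabled turns on the WebHDFS REST interface.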

<configuration>
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>tiandong63:9001</value>
    </property>

    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/hadoop/dfs/name</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/hadoop/dfs/data</value>
    </property>

    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>

    <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
    </property>
</configuration>
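You can check the rule that dfs.replication should not exceed the number of DataNodes against the slaves file. This sketch assumes each `<name>`/`<value>` pair sits inside one `<property>`, as in the file above; it is not a full XML parser. `get_site_prop` and `check_replication` are hypothetical helpers, and 3 is Hadoop's default when dfs.replication is absent.

```bash
#!/usr/bin/env bash
# Sketch: read a property from a Hadoop *-site.xml file and check that
# dfs.replication does not exceed the number of DataNodes in slaves.
# Not a full XML parser: expects <name>/<value> pairs as in the file above.

get_site_prop() {
  awk -v key="$2" '
    /<name>/ {
      n = $0
      sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n)
      want = (n == key)
    }
    want && /<value>/ {
      v = $0
      sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
      print v; exit
    }' "$1"
}

check_replication() {
  local hdfs_site="$1" slaves_file="$2" repl nodes
  repl=$(get_site_prop "$hdfs_site" dfs.replication)
  repl=${repl:-3}   # Hadoop's default when dfs.replication is not set
  # One DataNode per non-empty, non-comment line.
  nodes=$(grep -cEv '^[[:space:]]*(#|$)' "$slaves_file" || true)
  if [ "$repl" -gt "$nodes" ]; then
    echo "dfs.replication=$repl exceeds $nodes DataNode(s)" >&2
    return 1
  fi
  echo "dfs.replication=$repl, DataNodes=$nodes: OK"
}
```

Inside etc/hadoop, `check_replication hdfs-site.xml slaves` should report 2 replicas for 2 DataNodes with the files used here.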

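[hadoop@tiandong63 hadoop]$ cp mapred-site.xml.template mapred-site.xml    # Hadoop 2.7.7 ships only the template

[hadoop@tiandong63 hadoop]$ vim mapred-site.xml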

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>

    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>tiandong63:10020</value>
    </property>

    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>tiandong63:19888</value>
    </property>
</configuration>
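A mistyped host name (for example tiandong instead of tiandong63) usually surfaces only when the daemon that uses that address fails at start-up. The sketch below lists every host:port value in the given *-site.xml files and flags hosts outside the cluster. `KNOWN_HOSTS` mirrors the /etc/hosts entries from step 1 and is an assumption of this sketch. Wildcard addresses such as 0.0.0.0 would also be reported.

```bash
#!/usr/bin/env bash
# Sketch: flag host:port values in *-site.xml files whose host is not one of
# the cluster's hostnames. KNOWN_HOSTS is an assumption; keep it in sync
# with /etc/hosts.

KNOWN_HOSTS="tiandong63 tiandong64 tiandong65"

check_site_hosts() {
  local bad=0 file value host
  for file in "$@"; do
    # Extract <value>[scheme://]host:port</value>; plain paths and numbers
    # such as file:/home/hadoop/tmp or 131072 do not match.
    for value in $(sed -nE 's|.*<value>[[:space:]]*([A-Za-z][A-Za-z0-9+.-]*://)?([A-Za-z0-9.-]+:[0-9]+)[[:space:]]*</value>.*|\1\2|p' "$file"); do
      host=${value#*://}   # drop "hdfs://" if present
      host=${host%%:*}     # drop ":port"
      case " $KNOWN_HOSTS " in
        *" $host "*) ;;
        *) echo "$file: unknown host '$host' in '$value'"; bad=1 ;;
      esac
    done
  done
  return "$bad"
}
```

For example, run `check_site_hosts core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml` inside etc/hadoop. It prints nothing and returns 0 when every address uses a known host.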

[hadoop@tiandong63 hadoop]$ vim yarn-site.xml    # YARN settings: ResourceManager service addresses and the NodeManager auxiliary (shuffle) service

<configuration>

<!-- Site specific YARN configuration properties -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>

    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>

    <property>
        <name>yarn.resourcemanager.address</name>
        <value>tiandong63:8032</value>
    </property>

    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>tiandong63:8030</value>
    </property>

    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>tiandong63:8031</value>
    </property>

    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>tiandong63:8033</value>
    </property>

    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>tiandong63:8088</value>
    </property>
</configuration>
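The NodeManager looks up each auxiliary service's class under `yarn.nodemanager.aux-services.<service>.class`, where `<service>` is a name listed in `yarn.nodemanager.aux-services`. A class key whose middle part does not match (for example mapreduce.shuffle versus mapreduce_shuffle) is simply ignored. The sketch below flags such mismatches. `check_aux_services` is a hypothetical helper and, like the checks above, reads the XML line by line rather than parsing it fully.

```bash
#!/usr/bin/env bash
# Sketch: check that every yarn.nodemanager.aux-services.<name>.class key
# names a service listed in yarn.nodemanager.aux-services.

check_aux_services() {
  local file="$1" services key bad=0
  # Value of yarn.nodemanager.aux-services, spaces removed.
  services=$(awk '
    /<name>/ { want = ($0 ~ /<name>[ \t]*yarn\.nodemanager\.aux-services[ \t]*<\/name>/) }
    want && /<value>/ {
      v = $0
      sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
      print v; exit
    }' "$file" | tr -d ' ')
  # Service names used in ...aux-services.<name>.class keys.
  for key in $(sed -nE 's|.*<name>[[:space:]]*yarn\.nodemanager\.aux-services\.(.+)\.class[[:space:]]*</name>.*|\1|p' "$file"); do
    case ",$services," in
      *",$key,"*) ;;
      *) echo "class key for '$key' does not match aux-services '$services'"; bad=1 ;;
    esac
  done
  return "$bad"
}
```

Running `check_aux_services yarn-site.xml` in etc/hadoop prints nothing when the names agree.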