[yzyu@node1 ~]$ sudo partx -a /dev/vg1/lv1

[yzyu@node1 ~]$ sudo mkfs -t xfs /dev/vg1/lv1

[yzyu@node1 ~]$ sudo mkdir /var/local/osd1

[yzyu@node1 ~]$ sudo vi /etc/fstab

/dev/vg1/lv1 /var/local/osd1 xfs defaults 0 0

:wq

[yzyu@node1 ~]$ sudo mount -a

[yzyu@node1 ~]$ sudo chmod 777 /var/local/osd1

[yzyu@node1 ~]$ sudo chown ceph:ceph /var/local/osd1/

[yzyu@node1 ~]$ ls -ld /var/local/osd1/

[yzyu@node1 ~]$ df -hT

[yzyu@node1 ~]$ exit

Configure the osd2 storage device on node2:

[yzyu@node2 ~]$ sudo fdisk /dev/sdc...sdc

[yzyu@node2 ~]$ sudo pvcreate /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 /dev/sdl1 /dev/sdm1 /dev/sdn1 /dev/sdo1 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sds1 /dev/sdt1 /dev/sdu1 /dev/sdv1 /dev/sdw1 /dev/sdx1 /dev/sdy1 /dev/sdz1

[yzyu@node2 ~]$ sudo vgcreate vg2 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 /dev/sdl1 /dev/sdm1 /dev/sdn1 /dev/sdo1 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sds1 /dev/sdt1 /dev/sdu1 /dev/sdv1 /dev/sdw1 /dev/sdx1 /dev/sdy1 /dev/sdz1

[yzyu@node2 ~]$ sudo lvcreate -L 130T -n lv2 vg2

[yzyu@node2 ~]$ sudo mkfs.xfs /dev/vg2/lv2
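The 24 partition names passed to pvcreate and vgcreate above (/dev/sdc1 through /dev/sdz1) are easy to mistype by hand. A minimal sketch of generating the list instead, assuming the disks really are named sdc to sdz with one partition each; the function name `osd_partitions` is made up for this illustration:

```shell
# osd_partitions: print one partition path per line, /dev/sdc1 .. /dev/sdz1.
# Assumption: the disk letters c..z match the hardware, as in the commands above.
osd_partitions() {
    for letter in c d e f g h i j k l m n o p q r s t u v w x y z; do
        printf '/dev/sd%s1\n' "$letter"
    done
}
```

With the function defined in the current shell, `sudo pvcreate $(osd_partitions)` and `sudo vgcreate vg2 $(osd_partitions)` would receive the same arguments as the long commands above.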

[yzyu@node2 ~]$ sudo partx -a /dev/vg2/lv2

[yzyu@node2 ~]$ sudo mkfs -t xfs /dev/vg2/lv2

[yzyu@node2 ~]$ sudo mkdir /var/local/osd2

[yzyu@node2 ~]$ sudo vi /etc/fstab

/dev/vg2/lv2 /var/local/osd2 xfs defaults 0 0

:wq

[yzyu@node2 ~]$ sudo mount -a

[yzyu@node2 ~]$ sudo chmod 777 /var/local/osd2

[yzyu@node2 ~]$ sudo chown ceph:ceph /var/local/osd2/

[yzyu@node2 ~]$ ls -ld /var/local/osd2/

[yzyu@node2 ~]$ df -hT

[yzyu@node2 ~]$ exit
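Editing /etc/fstab by hand in vi works, but rerunning the steps can leave duplicate entries. The following is a rough sketch of a scripted alternative that appends the entry only when the device is not already listed. The helper name `add_fstab_entry` is hypothetical, and the mount options are simply the ones used above:

```shell
# add_fstab_entry FILE DEVICE MOUNTPOINT
# Append an XFS entry for DEVICE to FILE unless a line for DEVICE already exists.
add_fstab_entry() {
    fstab=$1
    device=$2
    mountpoint=$3
    # Match a line that starts with the device path followed by whitespace.
    if grep -q "^${device}[[:space:]]" "$fstab" 2>/dev/null; then
        return 0
    fi
    printf '%s %s xfs defaults 0 0\n' "$device" "$mountpoint" >> "$fstab"
}
```

Called as `add_fstab_entry /etc/fstab /dev/vg2/lv2 /var/local/osd2` from a root shell, it would write the same line as the vi session; trying it on a scratch copy of the file first is the safer route.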

Register the node hosts from the dlp admin node:

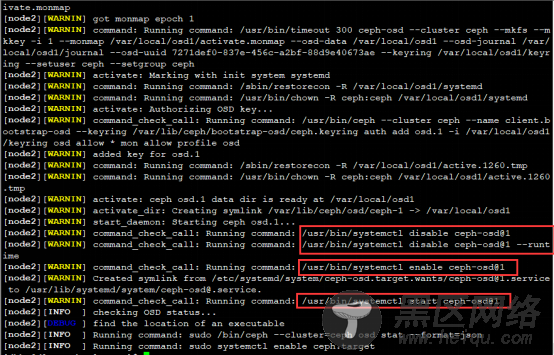

[yzyu@dlp ceph-cluster]$ ceph-deploy osd prepare node1:/var/local/osd1 node2:/var/local/osd2 ## prepare the OSDs and specify the storage directory on each node

[yzyu@dlp ceph-cluster]$ chmod +r /home/yzyu/ceph-cluster/ceph.client.admin.keyring

[yzyu@dlp ceph-cluster]$ ceph-deploy osd activate node1:/var/local/osd1 node2:/var/local/osd2 ## activate the OSDs

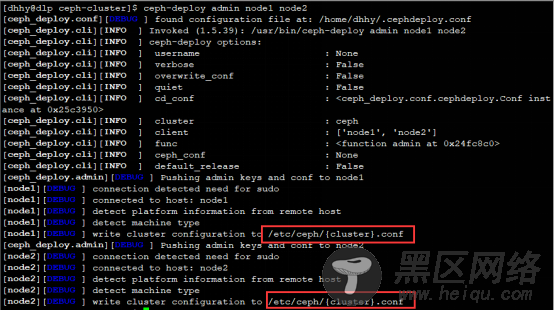

[yzyu@dlp ceph-cluster]$ ceph-deploy admin node1 node2 ## copy the admin keyring and configuration file to the nodes

[yzyu@dlp ceph-cluster]$ sudo cp /home/yzyu/ceph-cluster/ceph.client.admin.keyring /etc/ceph/

[yzyu@dlp ceph-cluster]$ sudo cp /home/yzyu/ceph-cluster/ceph.conf /etc/ceph/

[yzyu@dlp ceph-cluster]$ ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap

[yzyu@dlp ceph-cluster]$ ceph quorum_status --format json-pretty ## show detailed monitor quorum information for the Ceph cluster

Verify the Ceph cluster status:

[yzyu@dlp ceph-cluster]$ ceph health

HEALTH_OK
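For scripted checks, the first word of the `ceph health` output can be mapped to an exit code. This is only a sketch: the function name `health_exit_code` and the choice of exit codes are assumptions for illustration, not part of Ceph.

```shell
# health_exit_code STATUS_LINE
# Return 0 for HEALTH_OK, 1 for HEALTH_WARN, 2 for anything else
# (HEALTH_ERR, empty output, or an unexpected message).
health_exit_code() {
    case "$1" in
        HEALTH_OK*)   return 0 ;;
        HEALTH_WARN*) return 1 ;;
        *)            return 2 ;;
    esac
}
```

On the admin node it could be used as `health_exit_code "$(ceph health)" && echo "cluster healthy"`.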

[yzyu@dlp ceph-cluster]$ ceph -s ## show the Ceph cluster status

cluster 24fb6518-8539-4058-9c8e-d64e43b8f2e2

health HEALTH_OK

monmap e1: 2 mons at {node1=10.199.100.171:6789/0,node2=10.199.100.172:6789/0}

election epoch 6, quorum 0,1 node1,node2

osdmap e10: 2 osds: 2 up, 2 in

flags sortbitwise,require_jewel_osds

pgmap v20: 64 pgs, 1 pools, 0 bytes data, 0 objects

10305 MB used, 30632 MB / 40938 MB avail ## used, available, and total capacity

64 active+clean

[yzyu@dlp ceph-cluster]$ ceph osd tree

ID WEIGHT TYPE NAME UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 0.03897 root default

-2 0.01949 host node1

0 0.01949 osd.0 up 1.00000 1.00000

-3 0.01949 host node2

1 0.01949 osd.1 up 1.00000 1.00000
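The tree output above can also be summarized by script. A minimal sketch, assuming the column layout shown here (the Jewel-era format, where the OSD name is the third field and its state the fourth); newer releases add columns, so the field numbers would need adjusting. The function name `count_osds_up` is made up for this example:

```shell
# count_osds_up: read `ceph osd tree` output on stdin, print "UP/TOTAL".
# Assumption: OSD rows look like "0 0.01949 osd.0 up 1.00000 1.00000".
count_osds_up() {
    awk '
        # Only rows whose third field is an OSD name (osd.N) are counted.
        $3 ~ /^osd\.[0-9]+$/ { total++; if ($4 == "up") up++ }
        END { printf "%d/%d\n", up, total }
    '
}
```

It would be run as `ceph osd tree | count_osds_up`; a result where the two numbers differ means at least one OSD is not up.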

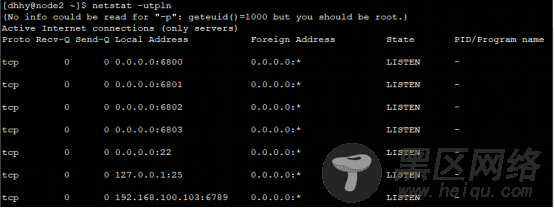

[yzyu@dlp ceph-cluster]$ ssh yzyu@node1 ## on node1, verify the listening ports, the configuration file, and disk usage

[yzyu@node1 ~]$ df -hT |grep lv1