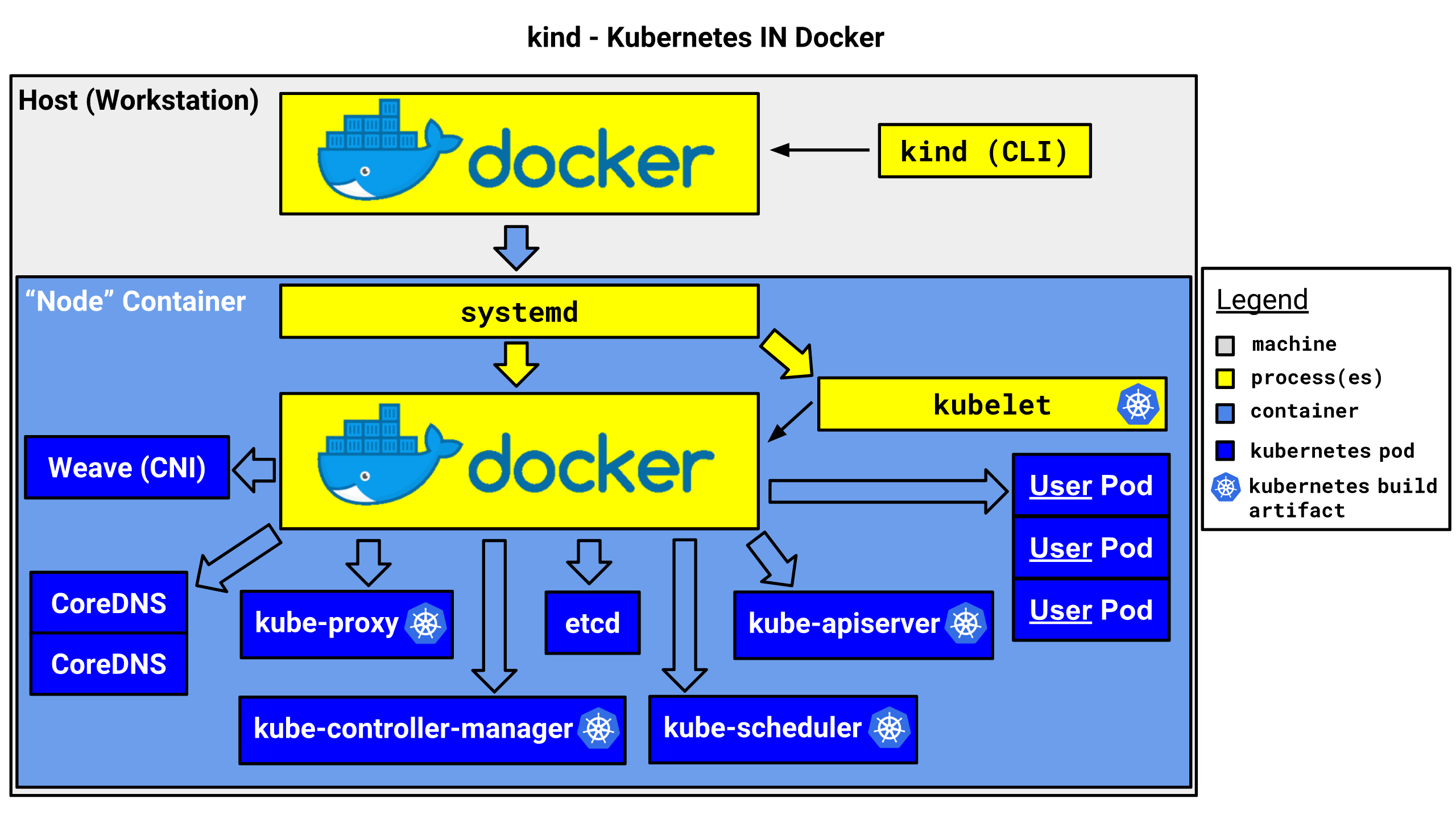

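Kind is short for Kubernetes In Docker. As the name suggests, it is a tool that uses Docker containers as nodes and deploys Kubernetes into them. The official Kubernetes documentation also recommends kind for setting up local clusters. By default, kind first pulls the kindest/node image, which contains the main Kubernetes components. Once the node containers are ready, kind creates the cluster with kubeadm, and the components run as containers under containerd inside each node. In short, kind exists to make testing Kubernetes clusters convenient and must not be used in production.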
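Because each node is an ordinary Docker container running its own containerd, the component containers inside a node can be listed from the host. The following is a minimal sketch. The function name `list_node_containers` is hypothetical, and the code assumes the kindest/node image ships `crictl`.

```shell
#!/usr/bin/env bash
# Sketch: list the Kubernetes component containers that containerd runs
# inside each kind node container. Assumption: the kindest/node image
# includes crictl. The function name is hypothetical.
list_node_containers() {
  local cluster="${1:-kind}"   # kind's default cluster name is "kind"
  local node
  # "kind get nodes --name <cluster>" prints one node container per line.
  for node in $(kind get nodes --name "$cluster"); do
    echo "== ${node} =="
    # The node is a plain Docker container, so docker exec reaches the
    # containerd instance running inside it.
    docker exec "$node" crictl ps
  done
}
```

For the cluster created later in this article, `list_node_containers test` should show the etcd, kube-apiserver and other control-plane containers of `test-control-plane`.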

Kind is written in Go. Prebuilt binaries for several operating systems are published on the repository's Release page and can be downloaded and used directly.
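Release assets are named per platform, as in `kind-linux-amd64` below. This lets a small helper compose the download URL for the current machine. The following is a sketch that assumes the other assets follow the same `kind-<os>-<arch>` pattern. Whether a given OS/architecture combination exists for a release should be checked on the Release page. The function name `kind_release_url` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build the kind release download URL for an OS/arch pair.
# Assumption: assets are named kind-<os>-<arch>, like kind-linux-amd64.
kind_release_url() {
  local version="$1"
  local os="${2:-$(uname -s)}"     # default: OS of this machine
  local arch="${3:-$(uname -m)}"   # default: CPU of this machine
  # Asset names use lowercase OS names (linux, darwin, ...).
  os="$(printf '%s' "$os" | tr '[:upper:]' '[:lower:]')"
  # Map uname -m spellings to the Go-style names used in asset names.
  case "$arch" in
    x86_64) arch=amd64 ;;
    aarch64) arch=arm64 ;;
  esac
  printf 'https://github.com/kubernetes-sigs/kind/releases/download/%s/kind-%s-%s\n' \
    "$version" "$os" "$arch"
}
```

Usage: `wget -O /usr/local/bin/kind "$(kind_release_url v0.8.1)" && chmod +x /usr/local/bin/kind`.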

```shell
# kind v0.8.1 creates Kubernetes v1.18.2 clusters by default (kindest/node:v1.18.2)
wget -O /usr/local/bin/kind https://github.com/kubernetes-sigs/kind/releases/download/v0.8.1/kind-linux-amd64 && chmod +x /usr/local/bin/kind
```

Install Docker

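```shell
# yum-config-manager is provided by yum-utils
yum install -y yum-utils
yum-config-manager --add-repo https://mirrors.ustc.edu.cn/docker-ce/linux/centos/docker-ce.repo
# the .repo file still points at download.docker.com; switch it to the USTC mirror
sed -i 's#download.docker.com#mirrors.ustc.edu.cn/docker-ce#g' /etc/yum.repos.d/docker-ce.repo
yum install -y docker-ce
# kind needs a running Docker daemon
systemctl enable --now docker
```

Deploy kubectl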
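A downloaded binary such as kubectl can be checked against a SHA-256 digest before it is made executable. The following is a minimal sketch. It assumes GNU coreutils `sha256sum`, which is standard on CentOS, and the function name `verify_sha256` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare a file's SHA-256 digest with an expected hex digest.
# Assumption: GNU coreutils sha256sum is installed. Name is hypothetical.
verify_sha256() {
  local file="$1" expected="$2"
  local actual
  # sha256sum prints "<digest>  <file>"; keep only the digest field.
  # A missing file yields an empty digest, which fails the comparison.
  actual="$(sha256sum "$file" 2>/dev/null | awk '{print $1}')"
  if [ -n "$actual" ] && [ "$actual" = "$expected" ]; then
    echo "OK: ${file}"
  else
    echo "MISMATCH: ${file} (expected ${expected}, got ${actual:-none})" >&2
    return 1
  fi
}
```

For example, after downloading kubectl you could run `verify_sha256 /usr/local/bin/kubectl "$(cat kubectl.sha256)"`. This assumes the release bucket publishes a `kubectl.sha256` file next to the binary that contains only the digest.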

```shell
wget -O /usr/local/bin/kubectl https://storage.googleapis.com/kubernetes-release/release/v1.18.5/bin/linux/amd64/kubectl
chmod +x /usr/local/bin/kubectl
```

Create a single-node kind cluster

Create the cluster with `kind create cluster`. By default this creates a single-node cluster.
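Before running `kind create cluster`, it can help to confirm that docker, kind and kubectl are on `PATH` and that the Docker daemon is answering. The following is a sketch, and the function name `preflight` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: preflight check before "kind create cluster".
# The function name is hypothetical.
preflight() {
  local missing=0 cmd
  for cmd in docker kind kubectl; do
    # command -v succeeds for executables on PATH (and shell functions).
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: ${cmd}" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ] || return 1
  # docker info fails while the daemon is stopped, e.g. right after
  # "yum install docker-ce" and before the service has been started.
  if ! docker info >/dev/null 2>&1; then
    echo "docker daemon is not reachable" >&2
    return 1
  fi
  echo "preflight OK"
}
```

Usage: `preflight && kind create cluster --name test`.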

```shell
# kind create cluster --name test
Creating cluster "test" ...
 ✓ Ensuring node image (kindest/node:v1.18.2)
 ✓ Preparing nodes
 ✓ Creating kubeadm config
 ✓ Starting control-plane
 ✓ Installing CNI
 ✓ Installing StorageClass
Cluster creation complete. You can now use the cluster with:

export KUBECONFIG="$(kind get kubeconfig-path --name="test")"
kubectl cluster-info
```

On the Docker side, this starts a single node container:

```text
CONTAINER ID   IMAGE                  COMMAND                  CREATED          STATUS          PORTS                                  NAMES
2e0a5e15a4a0   kindest/node:v1.18.2   "/usr/local/bin/entr…"   14 minutes ago   Up 14 minutes   45319/tcp, 127.0.0.1:45319->6443/tcp   test-control-plane
```

View cluster information
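The API server address that kubectl uses is the host port Docker maps to port 6443 of the node container (`127.0.0.1:45319->6443/tcp` in the `docker ps` output). The following sketch reads that mapping with `docker port`. It assumes a single control-plane cluster whose node container is named `<cluster>-control-plane`. In HA clusters, a separate load-balancer container fronts the API server instead. The function name `apiserver_url` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive the API server URL of a single-control-plane kind cluster
# from Docker's port mapping. Assumes the node container is named
# "<cluster>-control-plane". The function name is hypothetical.
apiserver_url() {
  local cluster="${1:-kind}"
  local mapping
  # docker port prints the host binding(s), e.g. "127.0.0.1:45319";
  # keep only the first one.
  mapping="$(docker port "${cluster}-control-plane" 6443/tcp | head -n 1)"
  [ -n "$mapping" ] || return 1
  echo "https://${mapping}"
}
```

For the cluster above, `apiserver_url test` should print the same endpoint that `kubectl cluster-info` reports.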

Point kubectl at the new cluster, persist the setting in `.bashrc`, and inspect the cluster. The `kind get kubeconfig-path` step matches the hint printed by older kind releases. Since kind v0.6.0, `kind create cluster` merges the credentials into `~/.kube/config` and switches kubectl to the `kind-<cluster name>` context (here `kind-test`), so with kind v0.8.1 this export is unnecessary.

```shell
export KUBECONFIG="$(kind get kubeconfig-path --name="test")"
echo 'export KUBECONFIG="$(kind get kubeconfig-path --name=test)"' >> /root/.bashrc
```

```text
# kubectl cluster-info
Kubernetes master is running at https://localhost:45319
KubeDNS is running at https://localhost:45319/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# kubectl get node -o wide
NAME                 STATUS   ROLES    AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                                  KERNEL-VERSION          CONTAINER-RUNTIME
test-control-plane   Ready    master   16m   v1.18.2   172.17.0.2    <none>        Ubuntu Disco Dingo (development branch)   3.10.0-693.el7.x86_64   containerd://1.2.6-0ubuntu1

# kubectl get pods --all-namespaces
NAMESPACE     NAME                                         READY   STATUS    RESTARTS   AGE
kube-system   coredns-fb8b8dccf-6r58d                      1/1     Running   0          17m
kube-system   coredns-fb8b8dccf-bntk8                      1/1     Running   0          17m
kube-system   etcd-test-control-plane                      1/1     Running   0          17m
kube-system   ip-masq-agent-qww8n                          1/1     Running   0          17m
kube-system   kindnet-vbz6w                                1/1     Running   0          17m
kube-system   kube-apiserver-test-control-plane            1/1     Running   0          16m
kube-system   kube-controller-manager-test-control-plane   1/1     Running   0          17m
kube-system   kube-proxy-wf7dq                             1/1     Running   0          17m
kube-system   kube-scheduler-test-control-plane            1/1     Running   0          16m
```

Start an nginx app
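`kubectl port-forward` only works once the pod is running, and it keeps the terminal busy. The wait, forward and fetch sequence for the nginx pod can therefore be wrapped in one helper that forwards in the background. The following is a sketch. The function name `check_pod_http` is hypothetical, and the fixed two-second pause for the forwarder to bind its port is a heuristic, not a guarantee.

```shell
#!/usr/bin/env bash
# Sketch: wait for a pod to be Ready, port-forward in the background,
# fetch the page once, then stop the forwarder.
# The function name and the 2-second pause are assumptions.
check_pod_http() {
  local pod="$1" local_port="${2:-8080}" remote_port="${3:-80}"
  kubectl wait --for=condition=Ready "pod/${pod}" --timeout=120s || return 1
  kubectl port-forward "pod/${pod}" "${local_port}:${remote_port}" >/dev/null &
  local pf_pid=$!
  sleep 2   # heuristic: give the forwarder time to bind the local port
  curl -s "http://localhost:${local_port}"
  local rc=$?
  # Stop the background forwarder; ignore errors if it already exited.
  kill "$pf_pid" 2>/dev/null || true
  wait "$pf_pid" 2>/dev/null || true
  return "$rc"
}
```

Usage: after the `kubectl run nginx ...` command of this section, `check_pod_http nginx 8080 80` should print the nginx welcome page.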

```shell
kubectl run nginx --image nginx:1.17.0-alpine --restart=Never --port 80 --labels="app=nginx-test"
# port-forward stays in the foreground; run curl from a second terminal
kubectl port-forward --address 0.0.0.0 pod/nginx 8080:80
curl localhost:8080
```

Create a cluster from a configuration file

Contents of `kube-config.yaml`:

```yaml
kind: Cluster
apiVersion: kind.sigs.k8s.io/v1alpha3
kubeadmConfigPatches:
- |
  apiVersion: kubeadm.k8s.io/v1beta2
  kind: ClusterConfiguration
  metadata:
    name: config
  networking:
    serviceSubnet: 10.0.0.0/16
  # pull control-plane images from the Aliyun mirror
  imageRepository: registry.aliyuncs.com/google_containers
- |
  apiVersion: kubeadm.k8s.io/v1beta2
  kind: InitConfiguration
  metadata:
    name: config
  # nodeRegistration belongs to InitConfiguration, not ClusterConfiguration
  nodeRegistration:
    kubeletExtraArgs:
      pod-infra-container-image: registry.aliyuncs.com/google_containers/pause:3.1
nodes:
# node list; defaults to a single node
- role: control-plane
```

```shell
# kind create cluster --name test2 --config kube-config.yaml
Creating cluster "test2" ...
 ✓ Ensuring node image (kindest/node:v1.18.2)
 ✓ Preparing nodes
 ✓ Creating kubeadm config
 ✓ Starting control-plane
 ✓ Installing CNI
 ✓ Installing StorageClass
Cluster creation complete. You can now use the cluster with:

export KUBECONFIG="$(kind get kubeconfig-path --name="test2")"
kubectl cluster-info
```

Create a kind HA cluster

An HA cluster can only be declared through a configuration file.
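In an HA configuration, the topology is simply the `nodes` list with one `role` entry per node. That part can therefore be generated for any number of control-plane and worker nodes. The following is a sketch that emits only the node list and leaves out the `kubeadmConfigPatches` used in this article. The apiVersion defaults to the one in these files. Newer kind releases use `kind.x-k8s.io/v1alpha4`, which can be passed as the third argument. The function name `gen_kind_config` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print a kind cluster config with N control-plane and M worker
# nodes. Only the node list is generated (no kubeadmConfigPatches).
# The function name is hypothetical.
gen_kind_config() {
  local control_planes="${1:-1}" workers="${2:-0}"
  local api_version="${3:-kind.sigs.k8s.io/v1alpha3}"
  local i
  echo "kind: Cluster"
  echo "apiVersion: ${api_version}"
  echo "nodes:"
  # kind expects the role names "control-plane" and "worker".
  for ((i = 0; i < control_planes; i++)); do
    echo "- role: control-plane"
  done
  for ((i = 0; i < workers; i++)); do
    echo "- role: worker"
  done
}
```

Usage: `gen_kind_config 3 3 > kind-ha-config.yaml && kind create cluster --name test-ha --config kind-ha-config.yaml` reproduces the node layout of the file below, without the image-mirror patches.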

Contents of `kind-ha-config.yaml`:

```yaml
kind: Cluster
apiVersion: kind.sigs.k8s.io/v1alpha3
kubeadmConfigPatches:
- |
  apiVersion: kubeadm.k8s.io/v1beta2
  kind: ClusterConfiguration
  metadata:
    name: config
  networking:
    serviceSubnet: 10.0.0.0/16
  # pull control-plane images from the Aliyun mirror
  imageRepository: registry.aliyuncs.com/google_containers
- |
  apiVersion: kubeadm.k8s.io/v1beta2
  kind: InitConfiguration
  metadata:
    name: config
  # nodeRegistration belongs to InitConfiguration, not ClusterConfiguration
  nodeRegistration:
    kubeletExtraArgs:
      pod-infra-container-image: registry.aliyuncs.com/google_containers/pause:3.1
nodes:
# The main change: add one role entry per node. Worker nodes must use
# the role "worker", master nodes must use the role "control-plane".
- role: control-plane
- role: control-plane
- role: control-plane
- role: worker
- role: worker
- role: worker
```

```shell
# kind create cluster --name test-ha --config kind-ha-config.yaml
Creating cluster "test-ha" ...
 ✓ Ensuring node image (kindest/node:v1.18.2)
 ✓ Preparing nodes
 ✓ Configuring the external load balancer ⚖️
 ✓ Creating kubeadm config
 ✓ Starting control-plane
 ✓ Installing CNI
 ✓ Installing StorageClass
 ✓ Joining more control-plane nodes
 ✓ Joining worker nodes
Cluster creation complete. You can now use the cluster with:

export KUBECONFIG="$(kind get kubeconfig-path --name="test3")"
kubectl cluster-info

# kubectl get nodes
NAME                   STATUS   ROLES    AGE     VERSION
test3-control-plane    Ready    master   7m44s   v1.18.2
test3-control-plane2   Ready    master   4m59s   v1.18.2
test3-control-plane3   Ready    master   2m18s   v1.15.0
test3-worker           Ready    <none>   110s    v1.15.0
test3-worker2          Ready    <none>   109s    v1.15.0
test3-worker3          Ready    <none>   105s    v1.15.0
```

Common operations