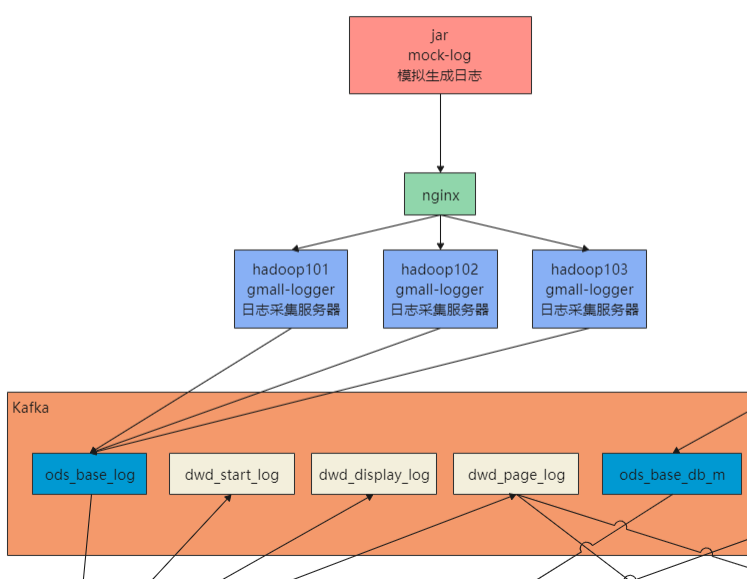

1. Log generation project

Log generation machine: hadoop101

jar: mock-log-0.0.1-SNAPSHOT.jar

gmall_mock

|----mock_common

|----mock_db

|----mock_log

Project URL: https://github.com/zhangbaohpu/gmall-mock

Package the mock_log module as a jar and add an application.yml file in the same directory as the jar:

cd /opt/software/applog/

vim application.yml

    # Enable external configuration
    # logging.config=./logback.xml
    # Business date
    mock.date: "2020-12-18"
    # Mock data sending mode
    mock.type: "http"
    # Target address in http mode
    mock.url: "http://hadoop101:8081/applog"
    #mock:
    #  kafka-server: "hdp1:9092,hdp2:9092,hdp3:9092"
    #  kafka-topic: "ODS_BASE_LOG"
    # Number of startups
    mock.startup.count: 1000
    # Maximum device id
    mock.max.mid: 20
    # Maximum member id
    mock.max.uid: 50
    # Maximum product id
    mock.max.sku-id: 10
    # Average page visit time
    mock.page.during-time-ms: 20000
    # Error probability, in percent
    mock.error.rate: 3
    # Delay between log records, in ms
    mock.log.sleep: 100
    # Product detail source: user query, product promotion, smart recommendation, sales campaign
    mock.detail.source-type-rate: "40:25:15:20"
    # Probability of claiming a coupon
    mock.if_get_coupon_rate: 75
    # Maximum coupon id
    mock.max.coupon-id: 3
    # Search keywords
    mock.search.keyword: "图书,小米,iphone11,电视,口红,ps5,苹果手机,小米盒子"

Then start the project:

java -jar mock-log-0.0.1-SNAPSHOT.jar
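Some settings in application.yml, such as mock.detail.source-type-rate: "40:25:15:20", are colon-separated ratios. As a rough illustration of how such a ratio could drive a weighted random choice, here is a minimal Java sketch. The class name RatioPicker and the source labels are hypothetical, and the real mock_log module may interpret the setting differently.

```java
import java.util.Arrays;
import java.util.concurrent.ThreadLocalRandom;

/** Illustrative only: not taken from the gmall-mock source. */
final class RatioPicker {
    private final int[] weights;
    private final int total;

    RatioPicker(String ratio) {
        // "40:25:15:20" -> [40, 25, 15, 20]
        this.weights = Arrays.stream(ratio.split(":"))
                .mapToInt(s -> Integer.parseInt(s.trim()))
                .toArray();
        this.total = Arrays.stream(weights).sum();
    }

    int total() {
        return total;
    }

    /** Maps a roll in [0, total) to the index of the bucket it falls into. */
    int indexFor(int roll) {
        if (roll < 0 || roll >= total) {
            throw new IllegalArgumentException("roll out of range: " + roll);
        }
        int upper = 0;
        for (int i = 0; i < weights.length; i++) {
            upper += weights[i];
            if (roll < upper) {
                return i;
            }
        }
        throw new IllegalStateException("unreachable");
    }

    /** Draws a random bucket index according to the configured ratio. */
    int next() {
        return indexFor(ThreadLocalRandom.current().nextInt(total));
    }

    public static void main(String[] args) {
        // Hypothetical labels for the four source types listed in the config comment
        String[] sources = {"query", "promotion", "recommendation", "campaign"};
        RatioPicker picker = new RatioPicker("40:25:15:20");
        int[] counts = new int[sources.length];
        for (int i = 0; i < 10_000; i++) {
            counts[picker.next()]++;
        }
        for (int i = 0; i < sources.length; i++) {
            System.out.println(sources[i] + ": " + counts[i]);
        }
    }
}
```

With this reading, rolls 0 to 39 select the first source, 40 to 64 the second, 65 to 79 the third, and 80 to 99 the fourth.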

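The application listens on port 8080 by default. Calling the method below sends log data to the endpoint configured in mock.url (port 8081, path /applog):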

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.ConfigurableApplicationContext;

    @SpringBootApplication
    public class GmallMockLogApplication {

        public static void main(String[] args) {
            ConfigurableApplicationContext context = SpringApplication.run(GmallMockLogApplication.class, args);
            // Fetch the mock task bean and start generating logs
            MockTask mockTask = context.getBean(MockTask.class);
            mockTask.mainTask();
        }
    }

2. Log collection project

Log processing machines: hadoop101, hadoop102, hadoop103

Project URL: https://github.com/zhangbaohpu/gmall-flink-parent/tree/master/gmall-logger

jar: gmall-logger-0.0.1-SNAPSHOT.jar

Configuration file in the project: application.yml

    server:
      port: 8081

    #kafka
    spring:
      kafka:
        bootstrap-servers: 192.168.88.71:9092,192.168.88.72:9092,192.168.88.73:9092
        producer:
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer

LoggerController.java

Receives the logs and sends them to Kafka:

    import lombok.extern.slf4j.Slf4j;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    /**
     * @author zhangbao
     * @date 2021/5/16 11:33
     **/
    @RestController
    @Slf4j
    public class LoggerController {

        @Autowired
        KafkaTemplate kafkaTemplate;

        @RequestMapping("/applog")
        public String logger(String param){
            // Log the incoming record, then forward it to the Kafka topic
            log.info(param);
            kafkaTemplate.send("ods_base_log",param);
            return param;
        }
    }

3. nginx configuration

Installed on: hadoop101

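Edit nginx.conf. hadoop101 acts as the load balancer, and hadoop101, hadoop102, and hadoop103 act as the log processing machines. nginx listens on port 80 by default. The main configuration is as follows:

    # Configure inside the server block
    location / {
        proxy_pass ;
    }

    # Important: configure the reverse-proxy upstream outside the server block
    upstream {
        server hadoop101:8081 weight=1;
        server hadoop102:8081 weight=2;
        server hadoop103:8081 weight=3;
    }

4. Distribute the log collection jar

Distribute the log collection jar to the other machines so that nginx can forward requests to them: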

xsync gmall-logger-0.0.1-SNAPSHOT.jar
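The weights 1, 2, and 3 configured for the upstream servers in step 3 decide how requests are shared among the three gmall-logger instances. The following Java sketch simulates a smooth weighted round-robin selection to show the resulting split. It is an illustration of the balancing idea only, not nginx's source code, and the class name WeightedRoundRobin is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative simulation of smooth weighted round-robin; not nginx code. */
final class WeightedRoundRobin {
    private final String[] servers;
    private final int[] weights;
    private final int[] current; // running score per server
    private final int total;

    WeightedRoundRobin(String[] servers, int[] weights) {
        if (servers.length != weights.length) {
            throw new IllegalArgumentException("servers and weights must have the same length");
        }
        this.servers = servers.clone();
        this.weights = weights.clone();
        this.current = new int[weights.length];
        int sum = 0;
        for (int w : weights) {
            sum += w;
        }
        this.total = sum;
    }

    /** Raises every score by its weight, picks the highest, then lowers the winner by the total. */
    String next() {
        int best = 0;
        for (int i = 0; i < servers.length; i++) {
            current[i] += weights[i];
            if (current[i] > current[best]) {
                best = i;
            }
        }
        current[best] -= total;
        return servers[best];
    }

    public static void main(String[] args) {
        WeightedRoundRobin lb = new WeightedRoundRobin(
                new String[] {"hadoop101:8081", "hadoop102:8081", "hadoop103:8081"},
                new int[] {1, 2, 3});
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (int i = 0; i < 600; i++) {
            counts.merge(lb.next(), 1, Integer::sum);
        }
        counts.forEach((server, n) -> System.out.println(server + " -> " + n));
    }
}
```

In this simulation every cycle of six requests sends one to hadoop101, two to hadoop102, and three to hadoop103, so hadoop103 handles half of the traffic.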

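5. Update the mock log generator configuration

Send the generated logs to nginx, which then distributes them to the log collection machines. The log generator runs on hadoop101.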

cd /opt/software/applog/

vim application.yml

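    # Mock data sending mode
    mock.type: "http"
    # Target address in http mode
    mock.url: "http://hadoop101/applog"

6. Cluster start/stop script

Put the log collection service and the nginx service into one script.

Create the script logger.sh in /home/zhangbao/bin and grant it execute permission (for example with chmod +x logger.sh):

cd /home/zhangbao/bin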

    #!/bin/bash
    # Path to the java binary
    JAVA_HOME=/opt/module/jdk1.8.0_144/bin/java
    APPNAME=gmall-logger-0.0.1-SNAPSHOT.jar

    case $1 in
    "start"){
        # Start the log collection service on every node, then start nginx
        for i in hadoop101 hadoop102 hadoop103
        do
            echo "================$i================="
            ssh $i "$JAVA_HOME -Xms32m -Xmx64m -jar /opt/software/applog/$APPNAME >/dev/null 2>&1 &"
        done
        echo "===============NGINX================="
        /opt/module/nginx/sbin/nginx
    };;
    "stop"){
        # Stop nginx first, then kill the log collection service on every node
        echo "===============NGINX================="
        /opt/module/nginx/sbin/nginx -s stop
        for i in hadoop101 hadoop102 hadoop103
        do
            echo "================$i==============="
            ssh $i "ps -ef|grep $APPNAME |grep -v grep|awk '{print \$2}'|xargs kill" >/dev/null 2>&1
        done
    };;
    esac

7. Test

| Service | hadoop101 | hadoop102 | hadoop103 |
|---|---|---|---|
| gmall_mock (log generation) | √ | | |
| gmall-logger (log collection) | √ | √ | √ |
| nginx | √ | | |
| kafka | √ | √ | √ |

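Note: the following operations must be run as the Linux user zhangbao, because these components were installed under that user and will not start otherwise. The scripts are all on hadoop101.

Start ZooKeeper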

su zhangbao

zk.sh start

Start Kafka

kf.sh start

After that, the jars of this project can be run as the root user.

Manual run

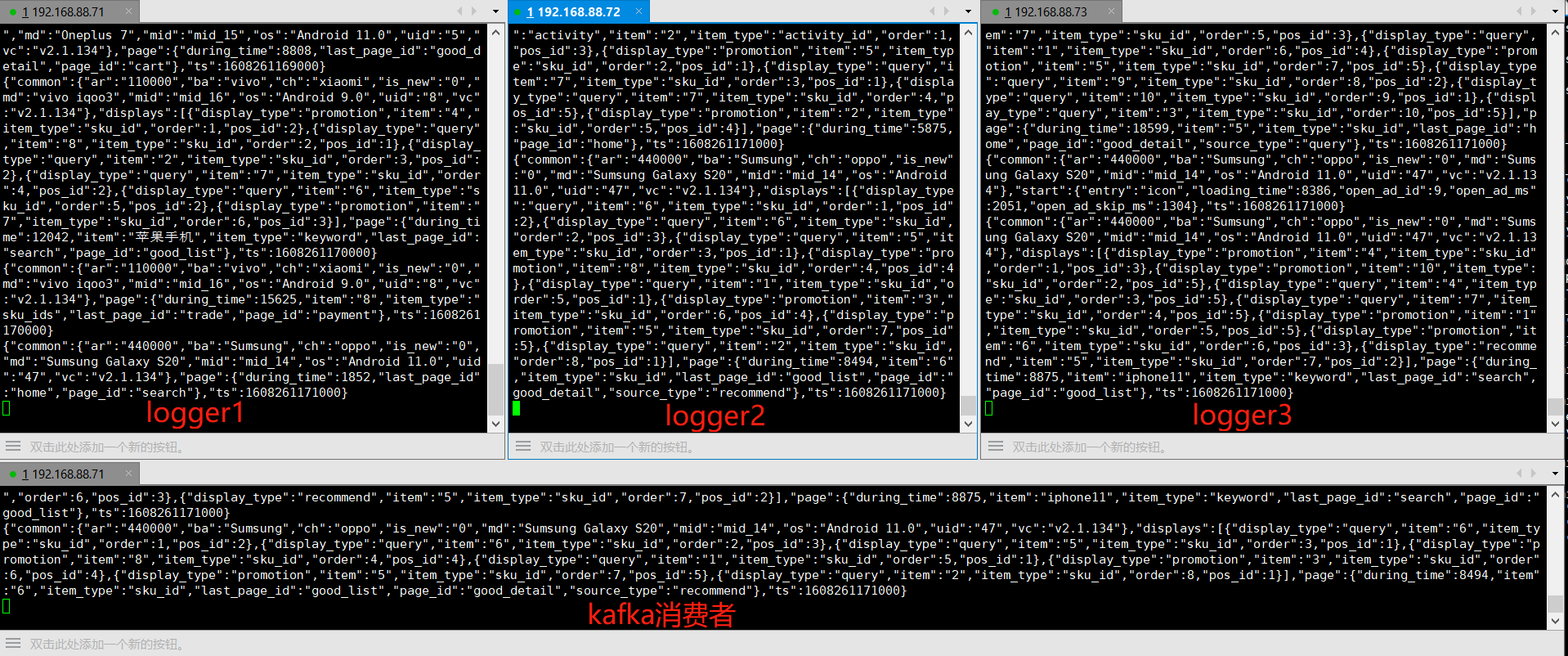

Start a Kafka consumer

kafka-console-consumer.sh --zookeeper hadoop101:2181,hadoop102:2181,hadoop103:2181 --topic ods_base_log

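Start nginx

/opt/module/nginx/sbin/nginx, address:

Start the log collection service

The log collection service is load-balanced across hadoop101, hadoop102, and hadoop103, so start it on each machine: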

java -jar /opt/software/applog/gmall-logger-0.0.1-SNAPSHOT.jar

Start the log generation service

The log generation service runs on hadoop101; one machine is enough:

java -jar /opt/software/applog/mock-log-0.0.1-SNAPSHOT.jar

Use the script to start and stop the log collection service and nginx

./bin/logger.sh start

./bin/logger.sh stop
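To check the path from nginx through gmall-logger to Kafka without running the full mock generator, a single hand-made record can be sent to the /applog endpoint and then looked for in the console consumer. The sketch below assumes the controller reads the record from a request parameter named param, as in LoggerController above. The class name ApplogProbe and the sample payload are hypothetical, and the code sticks to Java 8 APIs to match the JDK used in this setup.

```java
import java.io.IOException;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLEncoder;

/** Hypothetical helper for a one-off smoke test of the /applog endpoint. */
final class ApplogProbe {

    /** Builds the request URL with the log line URL-encoded into the "param" query parameter. */
    static String buildUrl(String endpoint, String logLine) {
        try {
            return endpoint + "?param=" + URLEncoder.encode(logLine, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is always available on a compliant JVM
            throw new IllegalStateException(e);
        }
    }

    /** Sends one GET request and returns the HTTP status code. */
    static int send(String endpoint, String logLine) throws IOException {
        HttpURLConnection conn =
                (HttpURLConnection) new URL(buildUrl(endpoint, logLine)).openConnection();
        conn.setRequestMethod("GET");
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(3000);
        try {
            return conn.getResponseCode();
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Default to the nginx address from step 5; pass another URL as the first argument
        String endpoint = args.length > 0 ? args[0] : "http://hadoop101/applog";
        String sample = "{\"probe\":\"hello\"}"; // made-up payload, not a real mock record
        System.out.println("HTTP " + send(endpoint, sample));
    }
}
```

If everything is wired up, the request should return status 200 and the sample record should appear in the ods_base_log consumer started earlier.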