设计思路:Kafka消息生产程序发送testmsg到Kafka集群,Apache Beam 程序读取Kafka的消息,经过简单的业务逻辑,最后发送到Kafka集群,然后Kafka消费端消费消息。

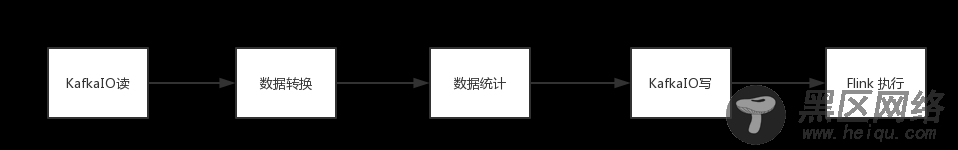

Apache Beam 内部数据处理流程图

Apache Beam 程序通过kafkaIO读取Kafka集群的数据,进行数据格式转换。数据统计后,通过KafkaIO写操作把消息写入Kafka集群。最后把程序运行在Flink的计算平台上。

软件环境和版本说明Kafka集群和Flink单机或集群配置,大家可以去网上搜一下配置文章,操作比较简单,这里就不赘述了。

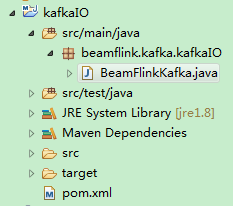

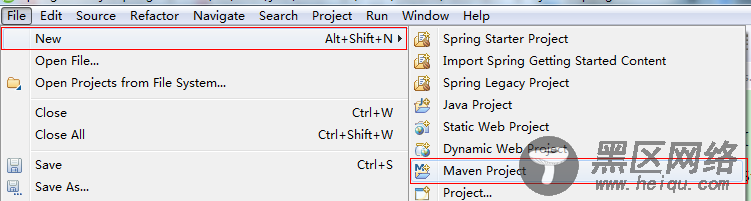

实践步骤1)新建一个Maven项目

2)在pom文件中添加jar引用

<dependency> <groupId>org.apache.beam</groupId> <artifactId>beam-sdks-java-io-kafka</artifactId> <version>2.4.0</version> </dependency> <dependency> <groupId>org.apache.kafka</groupId> <artifactId>kafka-clients</artifactId> <version>0.10.1.1</version> </dependency> <dependency> <groupId>org.apache.beam</groupId> <artifactId>beam-runners-core-java</artifactId> <version>2.4.0</version> </dependency> <dependency> <groupId>org.apache.beam</groupId> <artifactId>beam-runners-flink_2.11</artifactId> <version>2.4.0</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-java</artifactId> <version>1.5.2</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-clients_2.11</artifactId> <version>1.5.2</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-core</artifactId> <version>1.5.2</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-runtime_2.11</artifactId> <version>1.5.2</version> <!--<scope>provided</scope>--> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-streaming-java_2.11</artifactId> <version>1.5.2</version> <!--<scope>provided</scope>--> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-metrics-core</artifactId> <version>1.5.2</version> <!--<scope>provided</scope>--> </dependency>3)新建BeamFlinkKafka.java类