After a long wait, the expansion finally completed:

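    $ redis-cli -h node1 -p 6379 cluster nodes
    192.168.0.150:6379@16379 master - 0 1617337961000 14 connected 0-1364 5461-6826 10923-12287
    192.168.0.130:6379@16379 master - 0 1617337967569 3 connected 12288-16383
    192.168.0.120:6379@16379 myself,master - 0 1617337967000 12 connected 1365-5460
    192.168.0.140:6379@16379 master - 0 1617337963000 13 connected 6827-10922

The node information shows that the new node's slots were not handed out as one contiguous block. node4 (192.168.0.150:6379) received three separate ranges: 0-1364, 5461-6826 and 10923-12287. That adds up to 1365 + 1366 + 1365 = 4096 slots, so every master here ends up with 4096. The split does not always come out this exactly, and a deviation within about 2% is considered normal.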
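The per-node totals can be computed from the cluster nodes text instead of adding up the ranges by hand. The Python sketch below rests on three assumptions:

* The slot fields are whatever follows the connected/disconnected field.
* Ranges are inclusive.
* Bracketed migrating/importing markers should be skipped.

The helper names and the deviation measure (distance from an even 16384/N share) are hypothetical choices for illustration. They are not necessarily how redis-cli computes its own balance threshold.

```python
TOTAL_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def count_slots(slot_fields):
    """Count slots in fields such as '0-1364' (inclusive range) or '5461' (single slot)."""
    total = 0
    for field in slot_fields:
        if field.startswith("["):
            # Migrating/importing markers like [42->-<id>] are not owned slots
            continue
        if "-" in field:
            start, end = field.split("-")
            total += int(end) - int(start) + 1
        else:
            total += 1
    return total


def slots_per_node(cluster_nodes_output):
    """Map 'ip:port' to the number of slots that node owns."""
    counts = {}
    for line in cluster_nodes_output.strip().splitlines():
        fields = line.split()
        # Everything after the link-state field is slot information
        states = [i for i, f in enumerate(fields) if f in ("connected", "disconnected")]
        if not states:
            continue
        address = next(f for f in fields if "@" in f).split("@")[0]
        counts[address] = count_slots(fields[states[0] + 1:])
    return counts


def max_deviation_pct(counts):
    """Largest distance from an even split, as a percentage of the even share."""
    even_share = TOTAL_SLOTS / len(counts)
    return max(abs(n - even_share) / even_share * 100 for n in counts.values())


# The node lines shown above (that output did not include node IDs)
OUTPUT = """
192.168.0.150:6379@16379 master - 0 1617337961000 14 connected 0-1364 5461-6826 10923-12287
192.168.0.130:6379@16379 master - 0 1617337967569 3 connected 12288-16383
192.168.0.120:6379@16379 myself,master - 0 1617337967000 12 connected 1365-5460
192.168.0.140:6379@16379 master - 0 1617337963000 13 connected 6827-10922
"""

counts = slots_per_node(OUTPUT)
for address, n in counts.items():
    print(address, n)
print("max deviation: %.2f%%" % max_deviation_pct(counts))
```

For the output above, this reports 4096 slots for each of the four masters and a maximum deviation of 0.00%.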

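Master/replica adjustment

If you expand a Redis cluster in a live production environment, pay close attention to the master/replica relationships.

Correct the master/replica relationships manually. This is not demonstrated here.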

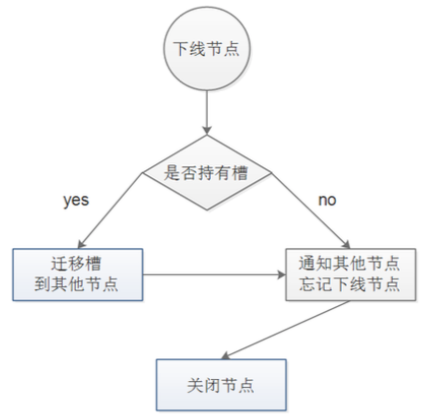
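After an expansion, check that no replica sits on the same host as its own master. If one does, a single machine failure would take out both copies of those slots. The Python sketch below flags such pairs from full cluster nodes output, where the node ID is the first field. The helper names and the sample cluster are made up for illustration, including the IDs m1, m2, r1 and r2 and the 10.0.0.x addresses.

```python
def parse_nodes(cluster_nodes_output):
    """Turn full `cluster nodes` lines into dicts with id, host, flags and master_id."""
    nodes = []
    for line in cluster_nodes_output.strip().splitlines():
        fields = line.split()
        address = fields[1].split("@")[0]       # 'ip:port@cport' -> 'ip:port'
        nodes.append({
            "id": fields[0],
            "host": address.rsplit(":", 1)[0],  # drop the port
            "flags": fields[2].split(","),
            "master_id": None if fields[3] == "-" else fields[3],
        })
    return nodes


def same_host_replicas(cluster_nodes_output):
    """Return (replica_id, master_id, host) for every replica sharing a host with its master."""
    nodes = parse_nodes(cluster_nodes_output)
    host_of = {n["id"]: n["host"] for n in nodes}
    problems = []
    for n in nodes:
        is_replica = "slave" in n["flags"] or "replica" in n["flags"]
        if is_replica and host_of.get(n["master_id"]) == n["host"]:
            problems.append((n["id"], n["master_id"], n["host"]))
    return problems


# Hypothetical cluster: r2 sits on the same host as its master m2
SAMPLE = """
m1 10.0.0.1:6379@16379 myself,master - 0 0 1 connected 0-8191
m2 10.0.0.2:6379@16379 master - 0 0 2 connected 8192-16383
r1 10.0.0.2:6380@16380 slave m1 0 0 1 connected
r2 10.0.0.2:6381@16381 slave m2 0 0 2 connected
"""

print(same_host_replicas(SAMPLE))
```

For the sample, it prints [('r2', 'm2', '10.0.0.2')]. A flagged replica can then be attached to a master on another host, for example by sending CLUSTER REPLICATE <master-id> to that replica.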

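Shrinking the cluster

Removing nodes

Before a node is taken offline, any slots it holds must be migrated to other nodes.

The data in those slots moves along with them while the cluster keeps serving requests. Clients may receive an ASK redirection for keys in a slot that is in the middle of migrating.

Once the slots have been migrated, the cluster can be told to forget the node.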

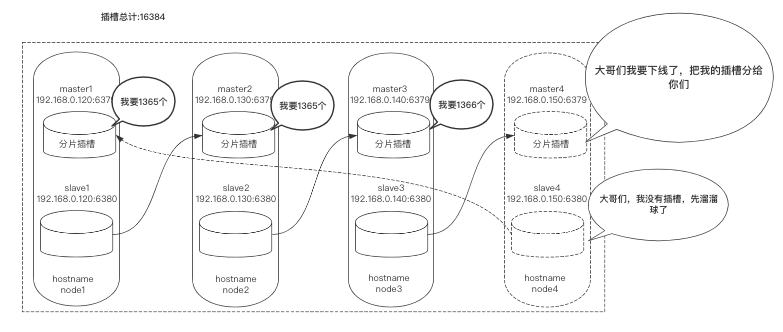
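Moving a single slot follows a fixed sequence of Redis Cluster commands, which redis-cli --cluster reshard runs for you:

1. Mark the slot IMPORTING on the target and MIGRATING on the source.
2. MIGRATE its keys in batches.
3. Assign the slot to the target with CLUSTER SETSLOT <slot> NODE <target-id>.

The Python sketch below only builds that command sequence for one slot and does not talk to Redis. The Node class, batch size, timeout and key names are assumptions for illustration. In practice, the keys would come from CLUSTER GETKEYSINSLOT on the source, repeated until it returns nothing.

```python
import shlex
from dataclasses import dataclass


@dataclass
class Node:
    node_id: str
    host: str
    port: int


def slot_migration_plan(slot, source, target, keys, batch=100, timeout_ms=5000):
    """Return (node, command) pairs that move one slot from source to target."""
    s = str(slot)
    # 1. Open the slot on both sides. While MIGRATING, the source answers
    #    ASK for keys it no longer has; the target accepts them after ASKING.
    plan = [
        (target, ["CLUSTER", "SETSLOT", s, "IMPORTING", source.node_id]),
        (source, ["CLUSTER", "SETSLOT", s, "MIGRATING", target.node_id]),
    ]
    # 2. Move the keys in batches: MIGRATE with an empty key name plus KEYS
    for i in range(0, len(keys), batch):
        plan.append((source, ["MIGRATE", target.host, str(target.port), "", "0",
                              str(timeout_ms), "KEYS", *keys[i:i + batch]]))
    # 3. Assign the slot to the target: destination first, then the source
    for node in (target, source):
        plan.append((node, ["CLUSTER", "SETSLOT", s, "NODE", target.node_id]))
    return plan


# Source: node4:6379 (which owned slot 0); target: the master at 192.168.0.120
source = Node("d1ca7e72126934ef569c4f4d34ba75562d36068f", "192.168.0.150", 6379)
target = Node("c71b52f728ab58fedb6e05a525ce00b453fd2f6b", "192.168.0.120", 6379)
# Placeholder keys, assumed to live in slot 0; real ones come from
# CLUSTER GETKEYSINSLOT 0 <count> on the source, repeated until empty
keys = ["key-a", "key-b", "key-c"]

for node, cmd in slot_migration_plan(0, source, target, keys, batch=2):
    print(f"{node.host}:{node.port}", shlex.join(cmd))
```

The Redis documentation also suggests sending the final SETSLOT ... NODE to the other masters. The sketch leaves that out to keep the sequence short.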

Performing the shrink

The shrink target is again node4:6379. It holds 4096 slots, which we need to move to node1:6379, node2:6379 and node3:6379.
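The slot split and the three interactive reshard runs can also be scripted. The Python 3.8+ sketch below splits node4's 4096 slots across three targets. It then builds one non-interactive redis-cli --cluster reshard command per target, using the --cluster-from, --cluster-to, --cluster-slots and --cluster-yes options of redis-cli 5 and later. It only prints the commands. The target IDs are the three masters that remain in this cluster. Which of them is node1, node2 or node3 is not stated here, and the helper names are hypothetical.

```python
import shlex


def split_slots(total, targets):
    """Split `total` slots over `targets` nodes; the first `remainder` nodes get one extra."""
    base, remainder = divmod(total, targets)
    return [base + 1 if i < remainder else base for i in range(targets)]


def reshard_commands(entry, source_id, target_ids, total):
    """Build one non-interactive `redis-cli --cluster reshard` argv per target."""
    commands = []
    for target_id, count in zip(target_ids, split_slots(total, len(target_ids))):
        commands.append([
            "redis-cli", "--cluster", "reshard", entry,
            "--cluster-from", source_id,
            "--cluster-to", target_id,
            "--cluster-slots", str(count),
            "--cluster-yes",
        ])
    return commands


NODE4_6379 = "d1ca7e72126934ef569c4f4d34ba75562d36068f"
TARGETS = [
    "7a7392cb66bea30da401d2cb9768a42bbdefc5db",  # 192.168.0.140:6379
    "c71b52f728ab58fedb6e05a525ce00b453fd2f6b",  # 192.168.0.120:6379
    "282358c2fb0c7c16ec60f2c4043b52a0eb91e19f",  # 192.168.0.130:6379
]

print(split_slots(4096, 3))
for cmd in reshard_commands("192.168.0.120:6379", NODE4_6379, TARGETS, 4096):
    # To actually run it: subprocess.run(cmd, check=True)
    print(shlex.join(cmd))
```

With the targets in this order, 192.168.0.140 receives 1366 slots and the other two receive 1365 each. That matches the final slot layout of this cluster after the shrink.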

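Work out how many slots each node receives:

    $ python3
    >>> divmod(4096, 3)
    (1365, 1)
    # two nodes get 1365 slots, one node gets 1366

Start the shrink by performing the following steps 3 times: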

    $ cd /usr/local/redis_cluster/redis-6.2.1/src/
    # On older Redis versions, start the command with the script name redis-trib.rb instead and omit the --cluster option
    $ redis-cli --cluster reshard 192.168.0.120 6379
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.
    # How many slots to move? 1365 on two of the runs, 1366 on the third
    How many slots do you want to move (from 1 to 16384)? 1365
    # Which node receives them? The node-id of node1:6379, node2:6379 or node3:6379 (a different one on each run)
    What is the receiving node ID? 282358c2fb0c7c16ec60f2c4043b52a0eb91e19f
    # Which node should send the slots?
    Please enter all the source node IDs.
      Type 'all' to use all the nodes as source nodes for the hash slots.
      Type 'done' once you entered all the source nodes IDs.
    # The node-id of node4:6379
    Source node #1: d1ca7e72126934ef569c4f4d34ba75562d36068f
    Source node #2: done
    # Type yes
    Do you want to proceed with the proposed reshard plan (yes/no)? yes

After the shrink completes, check the cluster state:

    $ redis-cli -h node1 -p 6379 cluster info
    cluster_state:ok
    cluster_slots_assigned:16384
    cluster_slots_ok:16384
    cluster_slots_pfail:0
    cluster_slots_fail:0
    cluster_known_nodes:8
    cluster_size:3
    cluster_current_epoch:17
    cluster_my_epoch:15
    cluster_stats_messages_ping_sent:17672
    cluster_stats_messages_pong_sent:26859
    cluster_stats_messages_meet_sent:7
    cluster_stats_messages_auth-req_sent:10
    cluster_stats_messages_auth-ack_sent:2
    cluster_stats_messages_update_sent:23
    cluster_stats_messages_mfstart_sent:1
    cluster_stats_messages_sent:44574
    cluster_stats_messages_ping_received:17283
    cluster_stats_messages_pong_received:24508
    cluster_stats_messages_meet_received:5
    cluster_stats_messages_fail_received:1
    cluster_stats_messages_auth-req_received:2
    cluster_stats_messages_auth-ack_received:5
    cluster_stats_messages_mfstart_received:1
    cluster_stats_messages_received:41805

Check whether node4:6379 still holds any slots that have not been moved out:

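    $ redis-cli -h node1 -p 6379 cluster nodes
    # Neither node holds any slots; all of them have been moved out
    d1ca7e72126934ef569c4f4d34ba75562d36068f 192.168.0.150:6379@16379 master - 0 1617349187107 14 connected
    f3dec547054791b01cfa9431a4c7a94e62f81db3 192.168.0.150:6380@16380 master - 0 1617349182874 0 connected

Taking the nodes offline

Now node4:6379 and node4:6380 can be taken offline. CLUSTER FORGET only changes the view of the node that receives it, and it keeps the forgotten ID on a ban list for just 60 seconds. According to the Redis documentation, the command therefore has to reach every remaining node within that window; otherwise gossip re-adds the removed node. redis-cli --cluster del-node takes care of this automatically. On node1, for example: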

    $ redis-cli -h node1 -p 6379 cluster FORGET d1ca7e72126934ef569c4f4d34ba75562d36068f
    $ redis-cli -h node1 -p 6379 cluster FORGET f3dec547054791b01cfa9431a4c7a94e62f81db3

Check that they have been removed from the node list:

    $ redis-cli -h node1 -p 6379 cluster nodes
    ff53e43f9404981a51d4e744de38004a5c22b090 192.168.0.130:6380@16380 slave 7a7392cb66bea30da401d2cb9768a42bbdefc5db 0 1617349417000 17 connected
    d645d06708e1eddb126a6c3c4e38810c188d0906 192.168.0.120:6380@16380 slave 282358c2fb0c7c16ec60f2c4043b52a0eb91e19f 0 1617349415310 16 connected
    282358c2fb0c7c16ec60f2c4043b52a0eb91e19f 192.168.0.130:6379@16379 master - 0 1617349414244 16 connected 5461-6825 12288-16383
    c71b52f728ab58fedb6e05a525ce00b453fd2f6b 192.168.0.120:6379@16379 myself,master - 0 1617349414000 15 connected 0-5460
    7a7392cb66bea30da401d2cb9768a42bbdefc5db 192.168.0.140:6379@16379 master - 0 1617349416000 17 connected 6826-12287
    6a627cedaa4576b1580806ae0094be59c32fa391 192.168.0.140:6380@16380 slave c71b52f728ab58fedb6e05a525ce00b453fd2f6b 0 1617349417761 15 connected

With that, node4:6379 and node4:6380 have been taken offline successfully.
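CLUSTER FORGET only affects the node that receives it, and the Redis documentation asks for it to reach every remaining node within 60 seconds. For that reason, it can help to generate the full set of commands rather than typing them against one node. The Python 3.8+ sketch below builds one redis-cli ... cluster forget command per remaining node and removed ID. It also checks a cluster nodes snapshot for leftover IDs. The host/port list and the snapshot are taken from the output above. The helper names are hypothetical, and the commands are only printed; redis-cli --cluster del-node is the built-in alternative.

```python
import shlex

REMOVED_IDS = [
    "d1ca7e72126934ef569c4f4d34ba75562d36068f",  # node4:6379
    "f3dec547054791b01cfa9431a4c7a94e62f81db3",  # node4:6380
]

# Every node that stays in the cluster, masters and replicas alike
REMAINING = [
    ("192.168.0.120", 6379), ("192.168.0.120", 6380),
    ("192.168.0.130", 6379), ("192.168.0.130", 6380),
    ("192.168.0.140", 6379), ("192.168.0.140", 6380),
]

# `cluster nodes` snapshot taken from node1 after the forget commands
AFTER = """
ff53e43f9404981a51d4e744de38004a5c22b090 192.168.0.130:6380@16380 slave 7a7392cb66bea30da401d2cb9768a42bbdefc5db 0 1617349417000 17 connected
d645d06708e1eddb126a6c3c4e38810c188d0906 192.168.0.120:6380@16380 slave 282358c2fb0c7c16ec60f2c4043b52a0eb91e19f 0 1617349415310 16 connected
282358c2fb0c7c16ec60f2c4043b52a0eb91e19f 192.168.0.130:6379@16379 master - 0 1617349414244 16 connected 5461-6825 12288-16383
c71b52f728ab58fedb6e05a525ce00b453fd2f6b 192.168.0.120:6379@16379 myself,master - 0 1617349414000 15 connected 0-5460
7a7392cb66bea30da401d2cb9768a42bbdefc5db 192.168.0.140:6379@16379 master - 0 1617349416000 17 connected 6826-12287
6a627cedaa4576b1580806ae0094be59c32fa391 192.168.0.140:6380@16380 slave c71b52f728ab58fedb6e05a525ce00b453fd2f6b 0 1617349417761 15 connected
"""


def forget_commands(remaining, removed_ids):
    """One CLUSTER FORGET per (remaining node, removed ID) pair."""
    return [
        ["redis-cli", "-h", host, "-p", str(port), "cluster", "forget", node_id]
        for host, port in remaining
        for node_id in removed_ids
    ]


def leftover_ids(cluster_nodes_output, removed_ids):
    """Removed IDs that still show up as a node ID in a `cluster nodes` snapshot."""
    seen = {line.split()[0] for line in cluster_nodes_output.splitlines() if line.strip()}
    return [node_id for node_id in removed_ids if node_id in seen]


# Print the commands; they all need to run within the 60-second window
for cmd in forget_commands(REMAINING, REMOVED_IDS):
    print(shlex.join(cmd))
print("still known:", leftover_ids(AFTER, REMOVED_IDS))
```

A snapshot from one node only shows that node's view. Running the leftover check against the cluster nodes output of each remaining node confirms the removal everywhere.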

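Master/replica adjustment

If you shrink a Redis cluster in a live production environment, pay close attention to the master/replica relationships.

Correct the master/replica relationships manually. This is not demonstrated here.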
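After shrinking, check whether every master that owns slots still has at least one replica. The Python sketch below flags slot-owning masters that no replica follows, using full cluster nodes output. It is a hypothetical helper that assumes slot fields start right after the link-state field (index 8 onward). The actual correction is still done manually.

```python
def masters_without_replicas(cluster_nodes_output):
    """Return IDs of slot-owning masters that no replica follows."""
    masters, followed = [], set()
    for line in cluster_nodes_output.strip().splitlines():
        fields = line.split()
        node_id, flags, master_id = fields[0], fields[2].split(","), fields[3]
        if "master" in flags and len(fields) > 8:
            # Fields after index 7 (the link state) are slot ranges
            masters.append(node_id)
        elif ("slave" in flags or "replica" in flags) and master_id != "-":
            followed.add(master_id)
    return [m for m in masters if m not in followed]


# Node list of this cluster after node4 was removed
AFTER_SHRINK = """
ff53e43f9404981a51d4e744de38004a5c22b090 192.168.0.130:6380@16380 slave 7a7392cb66bea30da401d2cb9768a42bbdefc5db 0 1617349417000 17 connected
d645d06708e1eddb126a6c3c4e38810c188d0906 192.168.0.120:6380@16380 slave 282358c2fb0c7c16ec60f2c4043b52a0eb91e19f 0 1617349415310 16 connected
282358c2fb0c7c16ec60f2c4043b52a0eb91e19f 192.168.0.130:6379@16379 master - 0 1617349414244 16 connected 5461-6825 12288-16383
c71b52f728ab58fedb6e05a525ce00b453fd2f6b 192.168.0.120:6379@16379 myself,master - 0 1617349414000 15 connected 0-5460
7a7392cb66bea30da401d2cb9768a42bbdefc5db 192.168.0.140:6379@16379 master - 0 1617349416000 17 connected 6826-12287
6a627cedaa4576b1580806ae0094be59c32fa391 192.168.0.140:6380@16380 slave c71b52f728ab58fedb6e05a525ce00b453fd2f6b 0 1617349417761 15 connected
"""

print(masters_without_replicas(AFTER_SHRINK))
```

For this cluster, it prints an empty list, since each of the three masters is followed by one replica.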